Reading @dan_hattrup's ideas on More Things to Measure in Cx last week got me thinking. There are a lot of ways customers can experience a "failure" of service or experience. Whole chapters, even books, could be written on how to know when you’ve got a failure of customer experience. Determining THAT there was a Cx failure is difficult enough.

But let’s say we could see individual Cx failures. How would we ever get to a point where we can notice where the most attention needs to be spent to address them? How do we notice those signals and differentiate them from random noise? We have so many ways of interacting with our customers, so many channels, so many physical locations (potentially), and on so many topics, how would we ever figure out the common denominators?

What if we could detect what's common in the failure points? What combination of segment, purchase type, date, channel, etc. is common to the failure point? Well, we could direct our resources to investigate those failures. We'd be searching for root cause in a much more contained place. Then we'd be able to correct that root cause before the issue spread to other customers, ultimately preserving or protecting those customers from that same failure.

Signal detection in small data

If we had perfect data, and only one or two customers, we could investigate and systematically hunt down the root cause for every issue, and spot when there were emerging new issues with our products, our services, our processes. We could monitor the experience every customer was having and intervene on their behalf. Especially in this digital world…the digital breadcrumbs of their experience through our digital system would indicate whether they were having a positive or negative experience.

Or, what if we only sold one product, with only one variety, flavor, or configuration, and only one way to purchase, yet had many, many customers? Again, we could easily track, search, and prevent issues from spreading.

Signal detection in big data

But what about when we have both: many flavors, configurations, suppliers, locations, types of purchasing channels, and many, many customers? What if millions of customers were experiencing millions of these events, continuously? How would we monitor whether there was some underlying issue causing a subset of these events? Then we would have a problem that is very complex. Or at least very big.

As an example, let's say we produce a line of consumer electronics, and know that our customers' experiences are affected by:

- The purchase experience; the payment process within the purchase experience

- The first use of the product, and how easy it was

- The first call to the call center if something happens to their product or associated service

- A visit to the repair center (if something breaks)

- The resolution and follow-up of the issue

Now, if we are looking to monitor signals of Cx failure we have a lot of factors to monitor (in data science we call these features):

- Customer demographic factors

- Customer tenure (how long they’ve been with us)

- Product/model purchased; what configuration/model

- How and through what channel the product was purchased

- Type of payment / process followed

- Product usage behavior (heavy? light?)

- …and many, many more

Big data signal detection explodes in size

Yes, this problem explodes in size as the number of attributes or features or factors adds up! Especially if we want to determine, say, commonalities within types of customers, their geographic location, how they choose to interact, which product / product configuration, and how they chose to pay! To show how quickly this gets ugly:

Let's say we could boil this down to a few important features and dimensions. (Forget, for now, the dozens of payment processes or types, or usage behavior profiles):

- 50 customer segments/types,

- 12 purchase channels,

- 1000 locations,

- 20 products/models.

12 million combinations, with just these four dimensions (that’s 50 x 12 x 1,000 x 20 = 12 million). That’s 12 million dashboards to check trend lines on at all times!
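The arithmetic above is easy to check, and easy to extend to see how fast it grows. Here is a minimal sketch in Python. The four dimension sizes come from the list above; the fifth dimension (10 payment types) is purely a hypothetical number I made up to show what one more factor does to the count.

```python
from math import prod

# Dimension sizes taken from the list above.
dimension_sizes = {
    "customer_segments": 50,
    "purchase_channels": 12,
    "locations": 1000,
    "products": 20,
}


def count_slices(sizes):
    """Number of distinct combinations when one value is picked per dimension."""
    return prod(sizes.values())


total_slices = count_slices(dimension_sizes)
print(f"{total_slices:,} combinations with four dimensions")

# Hypothetical fifth dimension (an assumption for illustration only).
with_payment_types = count_slices({**dimension_sizes, "payment_types": 10})
print(f"{with_payment_types:,} combinations after adding 10 payment types")
```

Every added dimension multiplies the total, which is why the count runs away so quickly.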

A real-world problem requires much more dimensionality, and many more features than this. Quickly you can see that we would be dealing with hundreds of millions, perhaps billions, of combinations of factors. It's a virtual heap of data, and the data changes every hour, every day. How do we detect the commonalities in what Cx failures are occurring, so we can direct resources to those emerging problems?

How do we find the signal in the noise? The needle in the haystack? Especially when the haystack is the exploding dimensions problem creating millions, perhaps billions of straws in the haystack. Stay tuned…..answer next week!

Part B:

In my posting last week I set up the Haystack problem...you want to use basic SPC, or trend charts, something easy to do, right? But pretty soon, as I described, you're monitoring hundreds, thousands, perhaps millions of charts, if you consider all the possible combinations of where the signal is occurring. No human eye can do this!

But first, why do we refer to this as "a signal"?

Signals are the Needles in the Haystack

If something has no specific or underlying cause, we expect it to occur randomly....some small amount of noise-level occurrence. For example, every once in a while, my calls are dropped, and I cannot spot any pattern in the drops; they seem to occur randomly. That's noise. Now suppose there is a cause, something that's affecting the outcome. For example, I used to drive home from downtown Los Angeles to Orange County on the 60 freeway frequently. I started noticing my calls would get dropped with alarming frequency. Once I paid attention, I realized it nearly always happened at the moment I passed a certain exit. THAT'S A SIGNAL.

So far we have a "proximate cause"...that is, I noticed the conditions, location, time of day, etc. where the signal occurred.

Notice I didn't yet know what the root cause was, but after a few searches online I discovered my wireless carrier was performing a tower-to-tower handoff at precisely that point in the journey. Of course, that's not a smoking gun yet, but more likely than not, that cell tower handoff was my ROOT CAUSE.

Now, imagine my carrier's problem....it's not just me, it's their entire install base experiencing random drops and some non-random drops. Cx failures, across all the different types of mobile phones, geo-locations, time of day, call traffic behavior, and many other relevant conditions, factors, features. They have the proverbial needle in the haystack problem I described in my post last week. How do they monitor all the different combinations they have to find the signal in the haystack?

Calculating Signal to Noise

In theory, it's easy. Take a sub-population of the overall population; for ease, let’s call it a “slice”, or a “feature vector”. Each slice contains the customers who share one particular combination of factors/features. Count the number of Cx failures in the slice, count the total number of customers in the slice, and divide the first by the second; then normalize the result. Ta-da! You get a number between 0 and 1, with 1 being a very high signal and 0 being a very low signal. You look for strong signals. The calculation is easy. Doing it at scale, over millions of slices, is the hard part.
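To make that calculation concrete, here is a minimal sketch in Python. It assumes each record is one customer, tagged with a slice key and a flag for whether they had a Cx failure. The post doesn't pin down what "normalize" means, so min-max scaling across slices is my assumption here; the function name `slice_signals`, the helper `make_events`, and the sample data are all made up for illustration.

```python
from collections import defaultdict


def slice_signals(events):
    """events: iterable of (slice_key, failed) pairs, one per customer.

    Returns {slice_key: signal}, where signal is the slice's failure rate
    min-max scaled across all slices (an assumed normalization).
    """
    totals = defaultdict(int)
    failures = defaultdict(int)
    for key, failed in events:
        totals[key] += 1
        if failed:
            failures[key] += 1

    if not totals:
        return {}

    # Failures in the slice divided by customers in the slice.
    rates = {key: failures[key] / totals[key] for key in totals}

    lo, hi = min(rates.values()), max(rates.values())
    if hi == lo:
        # Every slice fails at the same rate: nothing stands out.
        return {key: 0.0 for key in rates}
    return {key: (rate - lo) / (hi - lo) for key, rate in rates.items()}


def make_events(key, customers, failures):
    """Build made-up records: `failures` failed customers out of `customers`."""
    return [(key, i < failures) for i in range(customers)]


# Made-up sample data: three slices of ten customers each.
example_events = (
    make_events(("segment_a", "web"), 10, 1)
    + make_events(("segment_b", "call_center"), 10, 5)
    + make_events(("segment_c", "retail_store"), 10, 3)
)

signals = slice_signals(example_events)
for key, signal in sorted(signals.items(), key=lambda kv: kv[1], reverse=True):
    print(key, round(signal, 2))
```

A raw failure rate on a slice with only a handful of customers is itself noisy, so a real implementation would probably also want a minimum slice size or a statistical test before calling something a signal.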

Why is big data signal detection so hard?

The feature data are, if you're lucky, stored in some large data repository. Feature data for a large population effectively allow you to separate that population into the multi-dimensional sub-populations we called slices. Because there are likely millions of these, that alone takes some effort to maintain. Additionally, the features associated with each slice need to be updated in near real time as new feature data arrive, so you have a data management task there. Signal detection is a real-time problem, not a "look back over the last year" problem.

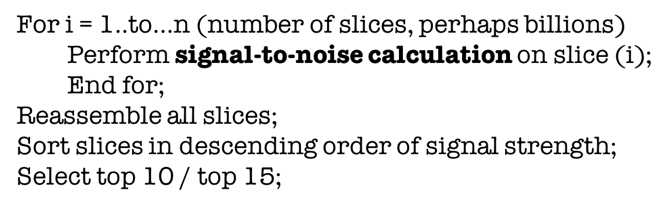

Then, you need to do the calculation for each slice, every time new data arrives. Most attempts at this problem select one slice or sub-population of data at a time, offload it to some other platform, and attack the problem sequentially and procedurally:

…those will be your strongest signals

If you are moving these slices from one place to another to do this calculation, sequentially, you find out there are not enough hours in the day, not enough days in the year to do near real-time signal detection. By the time you've finished the do loop, new data has already arrived, and your signal detection is out of date.

Reframe the problem: Make it embarrassingly parallel

Have you heard of the concept of an embarrassingly parallel problem? Per Wikipedia,

...an embarrassingly parallel workload or problem (also called perfectly parallel or pleasingly parallel) is one where little or no effort is needed to separate the problem into a number of parallel tasks. [1] This is often the case where there is little or no dependency or need for communication between those parallel tasks, or for results between them. [2]

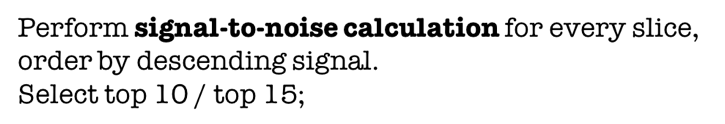

To reframe this as an embarrassingly parallel problem, we simply break the analytic problem into many sub-problems that are separate. Then, perform all the calculations on each slice simultaneously, non-procedurally, non-linearly….essentially:

This problem scales linearly, assuming you have a platform that can scale linearly with embarrassingly parallel problems AND assuming your analyst, data scientist, or engineer knows how to think in set-theory terms.
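As a rough illustration of what "embarrassingly parallel" looks like for this problem, here is a small Python sketch. It is not any particular platform's method, just the shape of the idea: partition the records by slice once, then hand every slice to a worker that needs nothing from any other slice. The function names and sample data are hypothetical. I use a thread pool from the standard library only to keep the example self-contained; on a real parallel platform the same independent per-slice function would be spread across many processors or nodes.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def partition_by_slice(events):
    """Group (slice_key, failed) records so each slice can be processed alone."""
    partitions = defaultdict(list)
    for key, failed in events:
        partitions[key].append(failed)
    return partitions


def failure_rate(item):
    """The work for ONE slice. It reads no data from any other slice."""
    key, outcomes = item
    return key, sum(outcomes) / len(outcomes)


def all_failure_rates(events, max_workers=4):
    """Apply failure_rate to every slice; the order of execution does not matter."""
    partitions = partition_by_slice(events)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return dict(pool.map(failure_rate, partitions.items()))


# Made-up sample records: (slice_key, did_the_customer_have_a_failure).
sample_events = [
    (("web", "model_x"), True),
    (("web", "model_x"), False),
    (("store", "model_y"), False),
    (("store", "model_y"), False),
]

rates = all_failure_rates(sample_events)
for key, rate in rates.items():
    print(key, rate)
```

Because no slice depends on another, adding workers adds throughput without adding coordination, which is where the linear scaling comes from.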

The payoff, of course, is obvious. If you can conquer this big data problem and turn it into an embarrassingly parallel problem that can be managed in real time, you can ferret out issues, fix them, and keep them from spreading. You prevent damage to your customers' experience with your product, your service, and your brand.