White vinegar is a great substance when you're cleaning windows. But amazingly, white vinegar can also keep flowers fresh and act as a fabric softener when you're washing clothes. Taking something that works well in one place and applying it creatively somewhere else has been a winning formula for Teradata over the years. Teradata's hashing algorithm is one of my favorite examples of successful repurposing within the

Advanced SQL Engine.

What is the Hashing Algorithm?

The hashing algorithm is a piece code that acts like a translation table. You give it an input string, such as a row’s primary index value, and it chews that value up and transforms it into something different, such as an ID to identify a row on disk. One advantage of using Teradata's hashing algorithm is that it is designed to smooth out lumpy values and reduce skew, which is important in a parallel database.

Teradata's hashing algorithm has another big advantage. The output value it produces, referred to as a row hash, is designed to be a consistent length for all input values -- 32 bits. Whether the input is a combination of different column values, comes from differently-sized values from a variable length column or is composed of different data types, the output of the hashing algorithm will always be a fixed size and format.

Dealing with uniformly-sized row identifiers simplifies the effort made by the database when storing, sorting, aggregating and joining rows. This is like managing money using one common currency, rather than having to handle rupees, dollars, euros and yens all at the same time.

Let’s consider some of the ways hashing is utilized in the database.

Storing a Row on Disk

The unit of parallelism in the Advanced SQL Engine is referred to as an AMP. Performance is generally better when the rows of a table can be stored evenly across all the AMPs in the configuration, so each AMP is doing a similar level of work when the table is being processed.

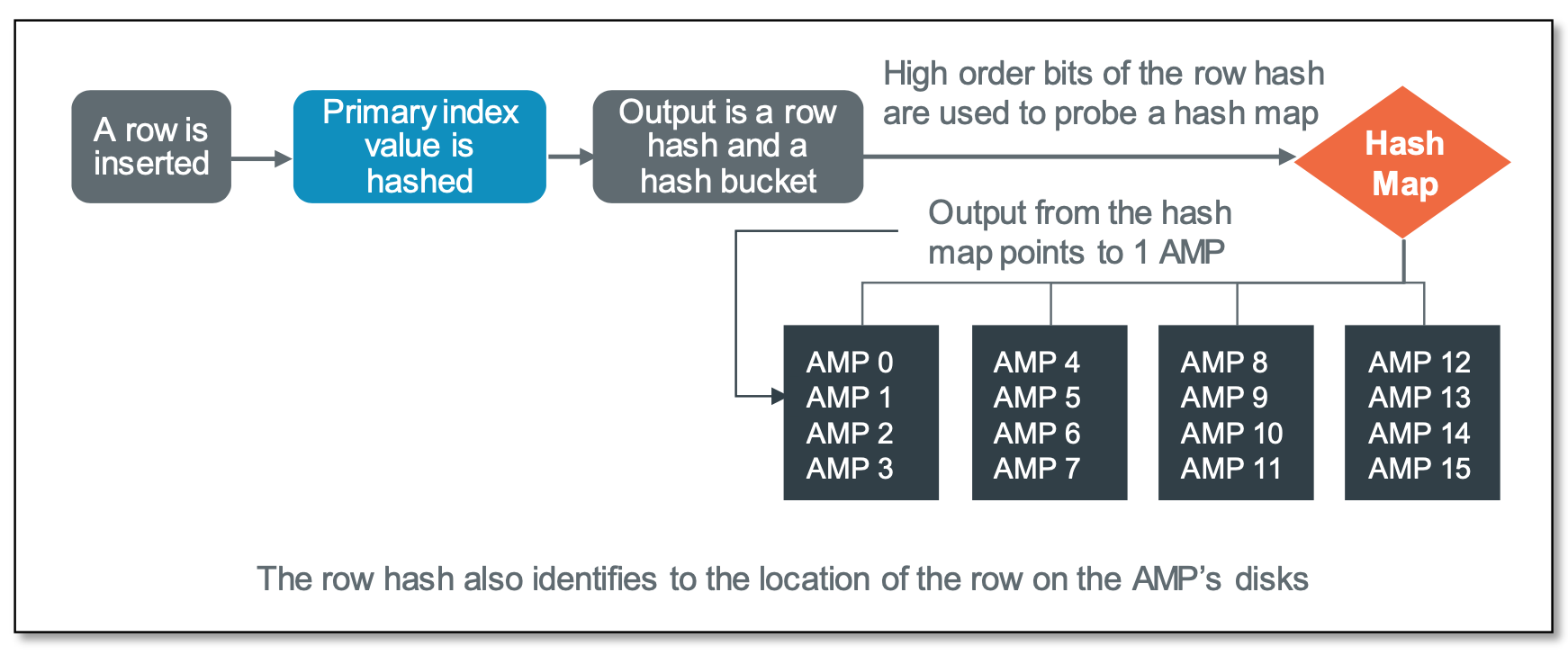

When a row is stored in the database, the hashing algorithm is used to determine which AMP will own that row, and the location of that row on that AMP’s disks. The primary index value of each row becomes the input into the hashing algorithm. When a row is accessed directly based on its primary index value, the same hashing algorithm is used to direct the access activity to the correct AMP, as illustrated in the figure below.

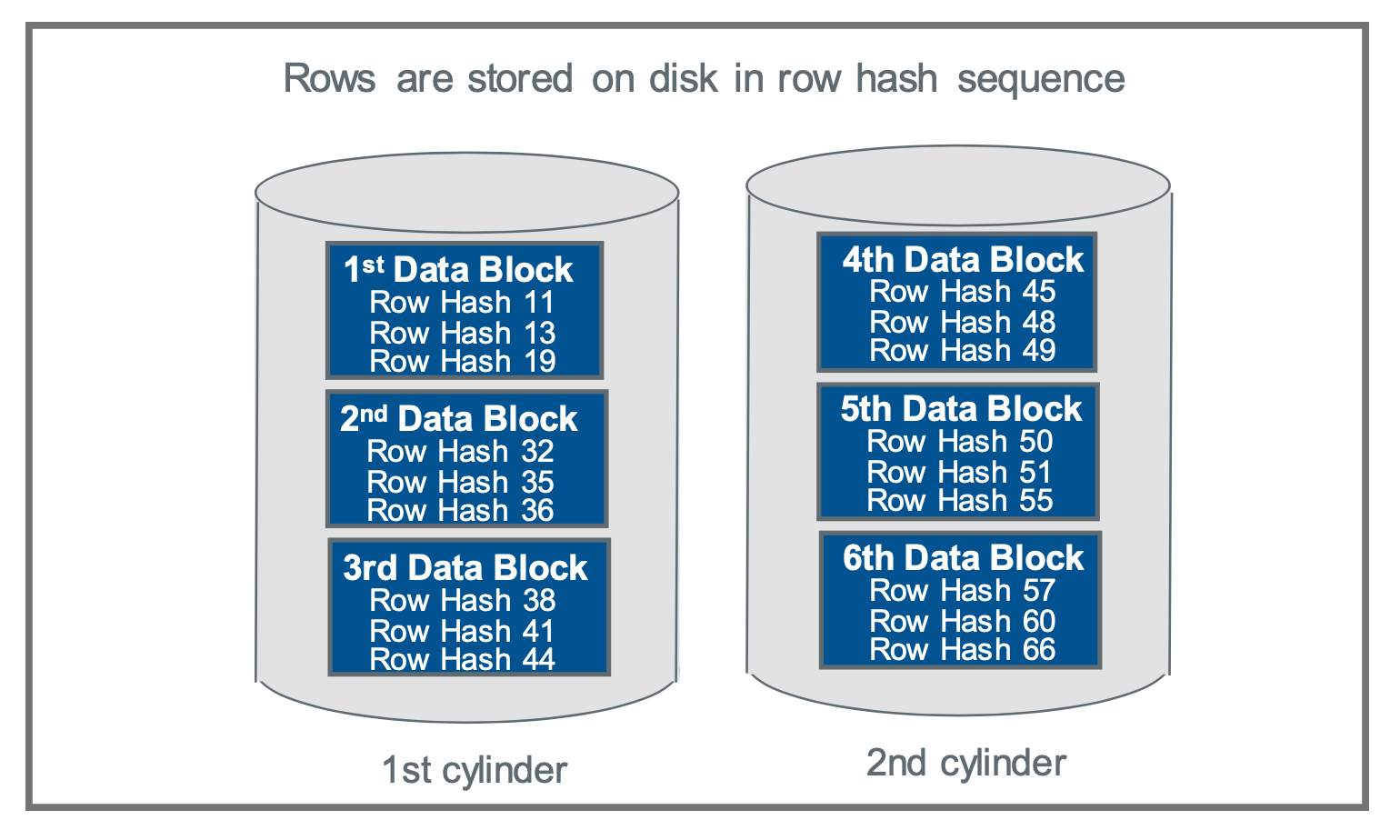

Once a row arrives on its assigned AMP, that same row hash value determines where on that AMP's disks the row will be stored. The row hash is used to sequence the rows on disk, so when a table is read, the data initially emerges in row hash order.

Joining rows

The same hashing algorithm plays a key role when tables in the database are joined. In this case, it is the join column values for each table participating in the join that will go through the hashing algorithm. Here’s how that works:

Each AMP is assigned its own memory, and consistent with its shared-nothing design, an AMP cannot share that memory with other AMPs. In order for a join to be performed, associated rows from both tables must be resident in the memory of the same AMP. If you want to have a meet-up with a friend you either have to travel to their house, they have to travel to your house or both of you will have to travel somewhere else, like a restaurant or a club. That is the same requirement when joining rows that are stored on different AMPs.

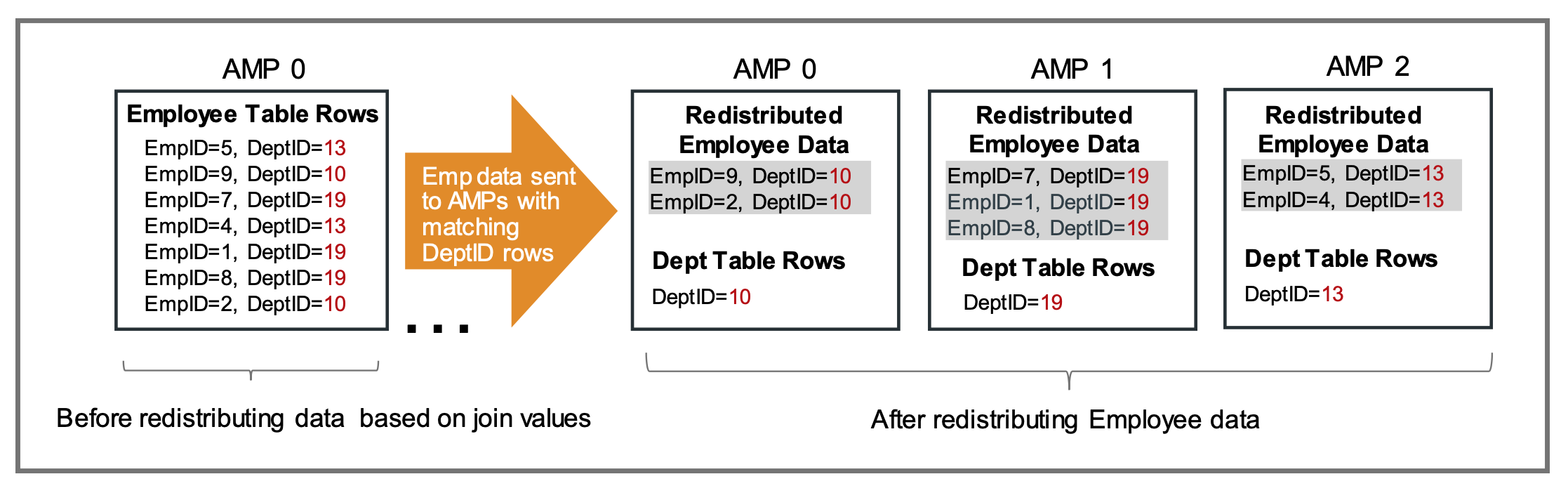

In preparation for a join, the database first moves the selected rows of each table in such a way that to-be-joined rows on both sides of the join end up on the same AMP. Assume you were joining an Employee table (with a primary index of EmpID) to a Department table (with a primary index of DeptID). Since the primary index domains are different between the two tables, you can expect the rows-to-be-joined to be found on different AMPs.

The hashing algorithm is the means of getting associated rows onto the same AMP. Because both the primary index and the join column of the Department table is the same (DeptID), Department rows are already positioned on the correct AMP for the join. Consequently, only the Employee rows need to be redistributed.

This happens in parallel across all AMPs. As each AMP sends its Employee rows to whichever AMP owns its associated Department row, each AMP receives Employee rows sent from other AMPs to join to Department rows that it owns at the same time. This is illustrated in the following figure.

Once table data has been redistributed to the AMP that owns the rows to which they will be joined, the redistributed rows are sorted in row hash sequence. In the graphic above, all Employee rows with a DeptID of 10 are redistributed to AMP 0 which is where the matching Department row with a DeptID of 10 resides. Sorting Employee table rows by row hash ensure they are in same sequence as the Department rows that are stored on that AMP. When both tables’ data are ordered in the same sequence, the join can take place effortlessly and quickly.

Global Aggregation

Yet another opportunity for using the same hashing algorithm is during the aggregation process. Assume that you have an end user query that counts the number of delinquent customers by the state of their residence. Due to what you have learned about how rows in the database are stored, you can expect that customer rows will be spread across all AMPs based on the table’s primary index: CustomerID.

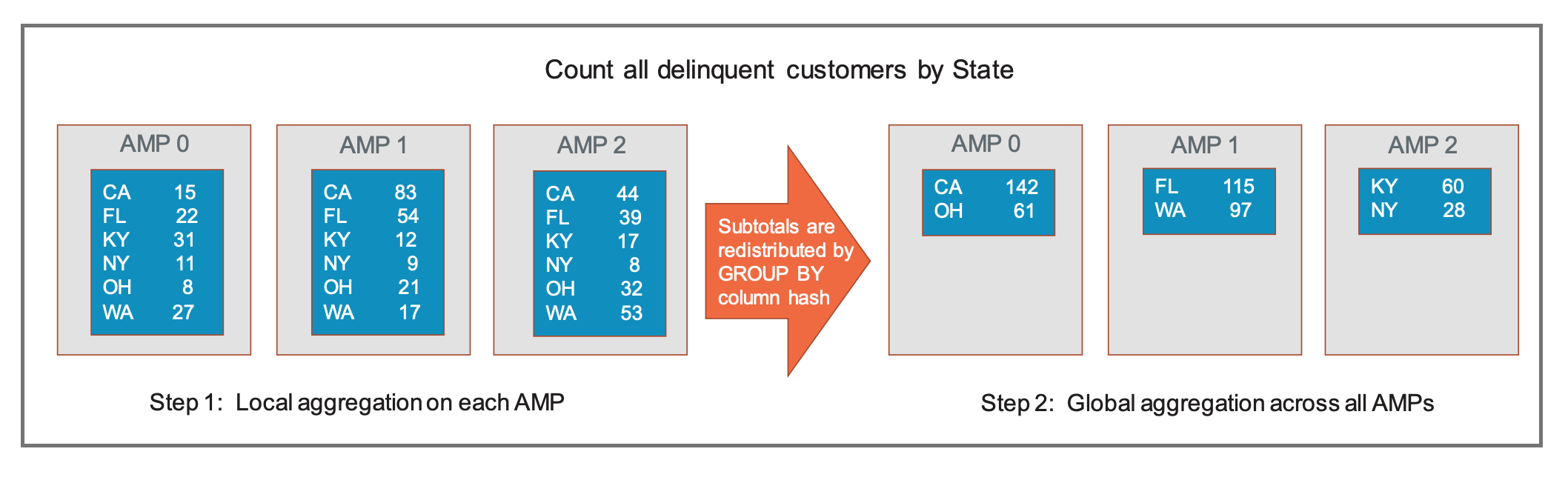

In order to perform this aggregation efficiently, each AMP will first read its local customer rows. Then each AMP, in parallel, will perform a local aggregation to produce its own count of delinquent customers by state, as shown in the left side of the graphic below. Once the local aggregations are complete, a grand total will have to be performed.

Instead of bringing all of the subtotals together on a single AMP in order to do the grand total, the aggregation process shares the work involved in doing that work with all AMPs in the configuration. The work of global aggregation is divvied up across AMPs by relying on the hashing algorithm. The GROUP BY values (such as CA, NY, OH) on each AMP are sent through the hashing algorithm. The output of the hashing algorithm points to one AMP for each different state value. The local subtotals are then sent to whichever AMP owns each particular GROUP BY value. This is illustrated on the right side of the following graphic.

Each AMP performs its part of the grand total in parallel with other AMPs, spreading the effort across the memory of all AMPs and reducing the time involved in doing a large aggregation. Then the

BYNET interconnect reads those grand totals and sorts and merges them, returning the answer set.

So, while it won't keep your flowers fresh or your windows clean, Teradata's hashing algorithm provides a big performance boost for complex or analytical queries running in Advanced SQL Engine -- whether scanning a table, joining two tables together or producing complex aggregations.