As I explained in the first of this 3-part blog series, following the collapse of Carillion, we started a project to see if, using open government data and analytics techniques, we could help government organisations manage risks associated with outsourcing.

In the first blog post I outlined our approach to working out the right things to measure and how we got the spend and contract data we needed to do that. In this post I will focus on how we analysed the data and what our findings could mean for government’s approach to procuring the services of outsourcers and its use of analytics at scale.

Finding insights: Analysing and making sense of the data

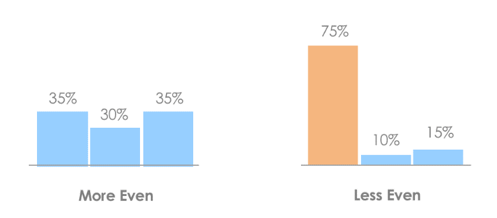

The most obvious way for government organisations to minimise the impact of one or more of their outsourcers going bust is to spread their spending across their outsourcing partners as equally as possible. Let’s call this evenness.

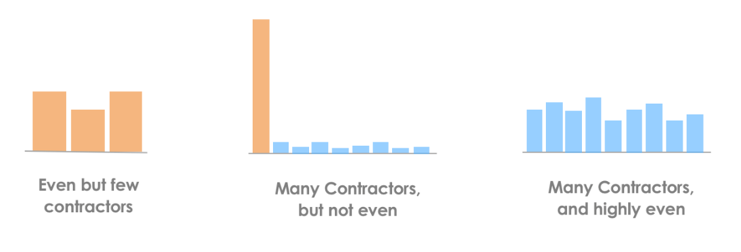

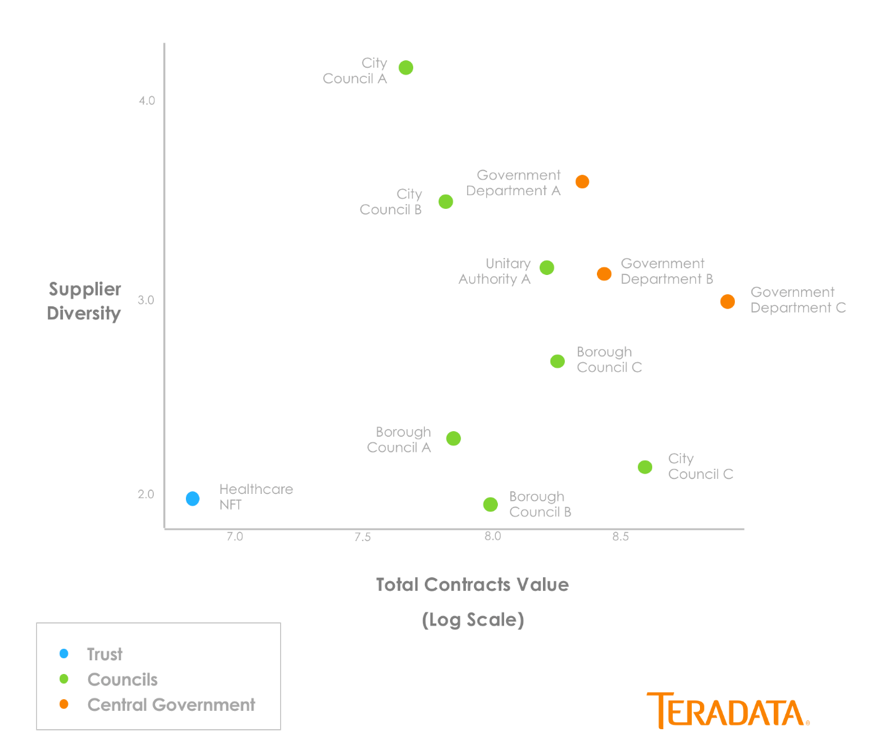

The more outsourcers there are, the further diluted the risk will be. By this reasoning, the most desirable and least risky scenario is one where the outsourcing budget is evenly spread across several contractors. We combined these two characteristics and expressed the result as a single value that we shall refer to as diversity.
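One way to make these two ideas concrete is sketched below. The post does not say which formulas we used, so treat this as an illustration under stated assumptions: it borrows the Shannon index (for diversity) and Pielou's index (for evenness) from ecology, and the function names and the supplier-to-spend dictionary are hypothetical.

```python
import math


def spend_shares(spend_by_supplier):
    """Convert {supplier: amount} into a list of spend proportions.

    Assumption: amounts are non-negative totals per supplier.
    Suppliers with zero spend are ignored.
    """
    amounts = list(spend_by_supplier.values())
    if any(a < 0 for a in amounts):
        raise ValueError("spend amounts must be non-negative")
    total = sum(amounts)
    if total == 0:
        raise ValueError("total spend must be greater than zero")
    return [a / total for a in amounts if a > 0]


def diversity(spend_by_supplier):
    """Shannon index: rises with more suppliers and with a more even spread."""
    return -sum(p * math.log(p) for p in spend_shares(spend_by_supplier))


def evenness(spend_by_supplier):
    """Pielou's index: Shannon index divided by its maximum, log(n).

    1.0 means spend is split equally; values near 0 mean one supplier
    dominates. Defined here as 0.0 when there is only one supplier.
    """
    n = len(spend_shares(spend_by_supplier))
    if n < 2:
        return 0.0
    return diversity(spend_by_supplier) / math.log(n)
```

With these definitions, an organisation that splits its budget equally across four suppliers scores an evenness of 1 and a higher diversity than one that splits equally across two, which matches the reasoning above: more suppliers and a more equal spread both reduce exposure to any single failure.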

In summary, our hypotheses were (1) that it was possible, given the data we had, to assess the evenness and diversity of a government organisation’s spending and (2) that doing so would give us a useful basis for assessing how well it could cope with supplier failure.

Doing both these things reliably required large volumes of data which ruled out a manual approach, so we built an analytics pipeline that helped us automate the ingestion, processing and analysis of the data.

Headline description of our sample dataset

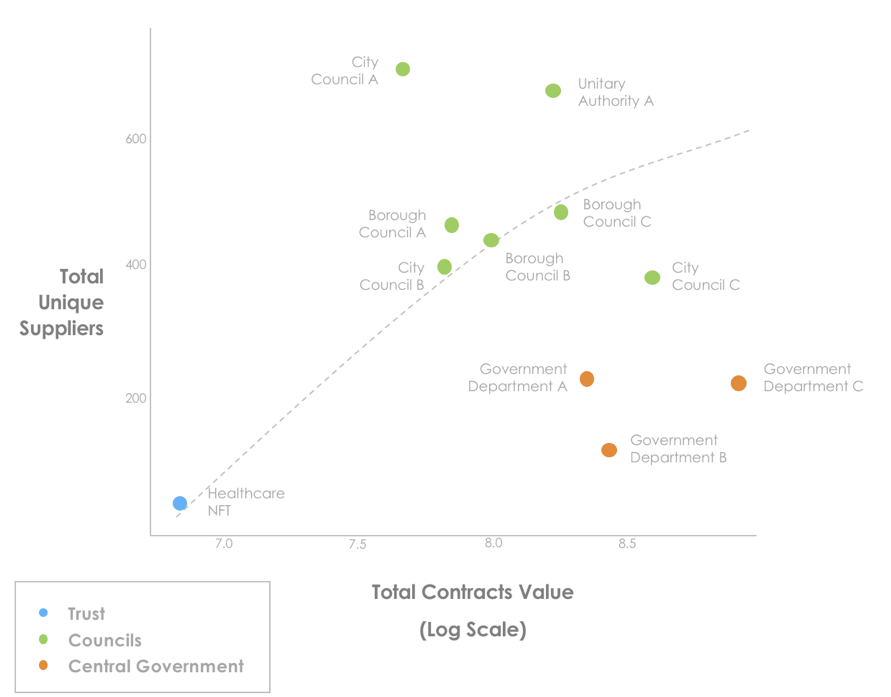

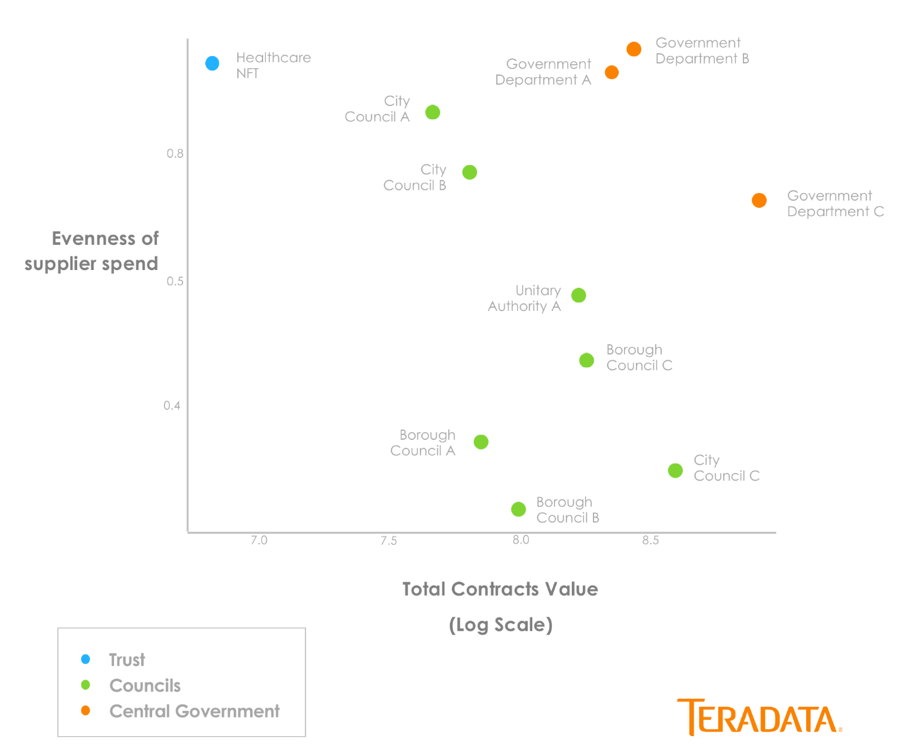

Spend Network gave us a sample dataset of 11 government organisations. It was a relatively small sample but it was varied enough in terms of organisation type, budget size (£6.9 million to £781.3 million) and the extent of their use of outsourcers (from as few as 18 to as many as 701) for us to glean useful insights and answer the questions I outlined above.

As I explained in the first blog post, Spend Network classifies its aggregated data which makes analysis easier. We focused on government spending with multiservice outsourcers. We did this by filtering the dataset on services that are usually provided by large multiservice outsourcers. By our estimation, their services fit about 28 different categories. The top 3 categories, which account for 81% of monies spent with these outsourcers, were:

- ‘Information technology consultation services’ (35%)

- ‘Management and Business professionals and Administrative services’ (29%)

- ‘Building and Facility Construction and maintenance services’ (17%)

We decided to exclude the ‘Information technology consultation services’ category because only one multiservice outsourcer (Capita) offered services in it. We focused our analyses on the other 2 top categories because the remaining 25 categories together accounted for less than 20% of government spend with outsourcers.
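The filtering and ranking step described above can be sketched roughly as follows. This is a minimal illustration, not our actual pipeline: the record layout (`category` and `amount` keys) and the function name are assumptions, and the real Spend Network schema may differ.

```python
from collections import defaultdict


def category_shares(records, outsourcer_categories):
    """Return each category's share of spend, largest first.

    records: iterable of dicts with hypothetical keys "category" and "amount".
    outsourcer_categories: the set of categories treated as typical of
    multiservice outsourcers (about 28 in our estimation).
    """
    totals = defaultdict(float)
    for record in records:
        # Keep only spend in categories usually served by multiservice outsourcers.
        if record["category"] in outsourcer_categories:
            totals[record["category"]] += record["amount"]

    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}

    # Rank categories by spend so the top few can be read off directly.
    ranked = sorted(totals.items(), key=lambda item: item[1], reverse=True)
    return {category: amount / grand_total for category, amount in ranked}
```

Taking the first few entries of the returned dictionary gives the top categories and the proportion of outsourcer spend each accounts for, which is how a breakdown like the one listed above could be produced.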

The 11 government organisations in our sample varied widely in evenness and in the number of outsourcers they did business with over the 6-year period from 2011 to 2016.

These visual representations of the departments’ spending profiles give us a level of insight, but they’re too high-level for us to reasonably assess how well any of them might cope with supplier failure. That’s the focus of the third and final blog post in the series.

Ade is a Senior Consultant at Teradata, with a focus on Public Sector organisations and their effective use of data. Prior to her role at Teradata, Ade worked at the Government Digital Service (GDS), the organisation based in the centre of government that was created to drive the transformation of UK government content and services through better use of digital technology and data transformation. Ade spent 7 years there working across a number of policy, strategy and delivery roles. She went on to lead the implementation of one of its core elements, common data infrastructure, in her role as Head of Data Infrastructure. In this role Ade focused on understanding the needs of users of government data, both within and outside government, and building a range of data products designed to meet those needs.

Prior to GDS, Ade worked at Nortel, a telecoms equipment vendor. While at Nortel, Ade worked in a number of roles and areas, including product acceptance testing, sales and design, and representing Nortel at international technical standards bodies such as 3GPP and IETF. These roles gave her a really good understanding of clients’ business needs, the commercial and strategic drivers behind them, and the value of the right technology for meeting those needs.

View all posts by Ade Adewunmi