Engineers and scientists are among the most technically skilled and creative assets in any organization. However, they are often constrained by limited access to the data they want and need. Data that is tied to a single system or application is an unnecessary risk and limits the ability of scientists and engineers to develop valuable analytics.

Whereas data siloes in most sectors are bridgeable through traditional warehousing strategies, industrial data siloes exist for much more complex reasons that are tied to operations and often represent clear and present risk to life, health, property (real or intellectual), and the environment. For these reasons, we find that industrial users are strongly tied to applications that encapsulate technical data, advanced mathematics and simulations, and mission-critical workflows. This is represented in the Purdue Reference Model shown below.


In most cases, data from Level 2 resides exclusively in Level 2, which is why PI data is rarely fully integrated with other systems of record. These siloes are difficult to mitigate without significant subject matter expertise and meticulous attention to management of change.

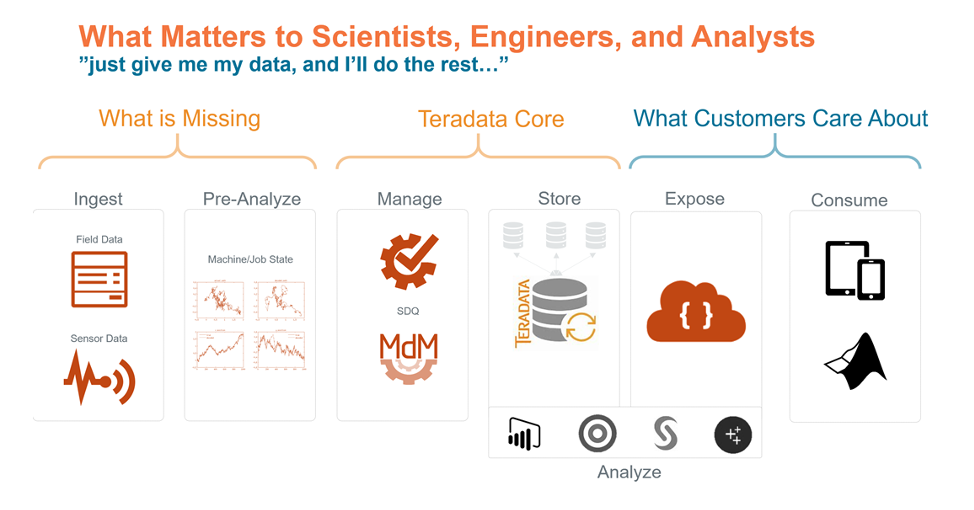

Additionally, more than other sectors, the industrial sector is home to “citizen data scientists.” The vast majority of employees have strong backgrounds in mathematics and statistics and can code at intermediate levels or better. Access to cheap compute and storage, even at the desktop level, has amplified the clarion call that repeats “…just give me my data and I’ll do the rest.”

This is not to say that access to data is a panacea. Unfettered access to data presents its own problems; among those are:

- Users of extensive time-series sensor data sets are constrained by local compute resources;

- Lack of parallelism makes computations inefficient;

- Inefficient or impossible joins at scale limit analysis to a single data set;

- Productionization of their code is often not a primary goal of scientists and engineers.

In a nutshell, engineers and scientists can code the mathematics to solve their problems but often cannot effectively deploy them at the scale or speed of their process. The opportunity is, therefore, to provide a set of solutions that:

- Ingests sensor data in real time or near real time;

- Provides advanced data modeling of “dirty” sensor data for science and engineering purposes;

- Integrates Levels 2-5 for true analytics;

- Abstracts applications from data, allowing engineers to choose best-of-breed solutions that aren’t reliant on bespoke integrations.
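As a rough illustration of the second and third capabilities, the sketch below cleans a small “dirty” sensor series and then attaches the most recent record from a slower-moving system to each reading (an as-of join). It is a minimal, standard-library example and not a description of any particular product: the function names, the valid range, and the sample values are all hypothetical, chosen only to show the idea.

```python
from bisect import bisect_right
import math


def clean_readings(readings, low, high):
    """Drop missing, non-finite, and out-of-range values.

    Keeps the last valid reading per timestamp and returns the
    result sorted by time. `low` and `high` are assumed sensor limits.
    """
    latest = {}
    for ts, value in readings:
        if value is None or not math.isfinite(value):
            continue  # missing or NaN/inf reading
        if not (low <= value <= high):
            continue  # physically implausible reading
        latest[ts] = value  # a later duplicate overwrites an earlier one
    return sorted(latest.items())


def asof_join(readings, context):
    """Attach to each reading the latest context record at or before it."""
    context = sorted(context)
    ctx_times = [ts for ts, _ in context]
    joined = []
    for ts, value in readings:
        i = bisect_right(ctx_times, ts) - 1
        label = context[i][1] if i >= 0 else None
        joined.append((ts, value, label))
    return joined


# Hypothetical sample data: (timestamp, value) sensor readings and
# (timestamp, activity) records from a higher-level system.
raw = [(0, 101.2), (1, None), (2, float("nan")),
       (3, 9999.0), (3, 102.8), (5, 103.1)]
activities = [(0, "drilling"), (4, "tripping")]

clean = clean_readings(raw, low=0.0, high=500.0)
joined = asof_join(clean, activities)
print(joined)
```

On a desktop this works for a handful of points; the problems listed earlier (local compute limits, lack of parallelism, joins at scale) appear when the same logic has to run over years of high-frequency data from many sensors.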

By providing these four capabilities, we unleash the creative potential of scientists and engineers and allow them to focus on what is most important—continuously improving safety, productivity, and quality of life.


See more information about Teradata Vantage for Oil & Gas on our website. Learn more about Teradata Vantage. If you would like to set up a demo, please contact us.

Nathan Zenero holds a Bachelor of Science degree in Petroleum Engineering from Texas A&M University and a Lean/Six-Sigma Certification from the University of Oklahoma. As a drilling engineer for Chesapeake, he was responsible for evaluation and implementation of new technology with a special focus on quality systems, drilling optimization, automation, big data, and real-time systems.

At Chesapeake, he performed pioneering work, from 2013 to 2015, in the field of operational data quality in support of over 200 drilling and completions rigs. His work serves as a standard reference in the domain and was the catalyst for the Operators’ Group on Data Quality (OGDQ), which he founded and for which he served as president for two years. He is co-developer of the industry’s first iron roughneck makeup torque validation and calibration device and is widely recognized for the definitive study on the topic (SPE 178776-MS). He is also co-developer of a high-accuracy BOP testing system and has personally developed and performed detailed, ISO-compliant audits of nearly a dozen BOP testing providers.

Nathan has several patents pending in the fields of real-time operations process management and automated data collection. He is an expert in drilling data analytics and is a leader in the use of applied statistics, case-based reasoning, and big-data systems to improve real-time operations. He has published on many topics including automation, data quality, analytics, data science, blockchain, and emerging technologies.

Mr. Zenero has considerable experience in the design and implementation of drilling controls and automation and, as a product line manager, deployed upgrades to more than 75 rig control systems. He also has applied experience in deep-water flow assurance, hydraulic fracturing, and reservoir simulation.
