The cloud is a collection of virtual machines and storage hosted on physical hardware in a data center. Management software provisions virtual machine instances of different sizes, manually or automatically, based on demand.

Managing Elastic Demand in Vantage

Teradata has always had workload management that dynamically allocates resources of different sizes based on demand. It began as a priority scheduler and later evolved into Teradata Workload Management (TWM).

TWM is designed to automatically allocate resources in proportion to workload demand. A workload is defined in terms of who is executing the query, how the query is classified by size and complexity, and which data sets are being accessed. The “who” allows usage to be collected by user in accounting logs, which makes it easier to allocate costs to departments based on usage.

Whenever a query is submitted, it is analyzed and automatically assigned to the appropriate workload definition, so that similar or homogeneous queries are executed under a common set of rules for predictable and efficient management of resources.
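To make the classification idea concrete, here is a minimal sketch in Python. It is not TWM's rule engine or API: the `Query` fields, the workload names, and the cost threshold are all hypothetical, chosen only to show how the "who", the size of the query, and the data accessed could map a query to a workload definition.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Query:
    user: str              # the "who" submitting the query
    estimated_cost: float  # hypothetical size/complexity estimate
    tables: frozenset      # data sets being accessed

# Hypothetical workload rules, checked in order; the first match wins.
RULES = [
    ("tactical", lambda q: q.estimated_cost < 1.0),
    ("finance_reporting",
     lambda q: q.user.startswith("fin_") and "ledger" in q.tables),
    ("ad_hoc", lambda q: True),  # catch-all so every query gets a workload
]

def classify(query):
    """Return the name of the first workload whose rule matches the query."""
    for name, matches in RULES:
        if matches(query):
            return name
    raise ValueError("no workload matched")  # unreachable with the catch-all

print(classify(Query("fin_amy", 50.0, frozenset({"ledger"}))))
```

Because every query lands in exactly one workload, the rules attached to that workload apply uniformly to all similar queries.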

The efficiency of TWM allows a pool of resources to be dynamically distributed across a mixture of complex workloads. These range from time-series integration with geospatial functions for 4D analytics through space and time, to predictive analytics leveraging machine learning functions, to prescriptive analytics that influence future results, to seamless integration with cloud services that manage highly dynamic streaming ingestion and event-based triggers to enable an active data warehouse, and more.

When you consider "cloud native" or "built for the cloud" options, you also need to consider that the cloud as we know it today is intended for intermittent processing, where performance demand is infrequent and dynamic and where long-term investments in compute and storage would otherwise be wasted on infrequent use and constant hardware change. For enterprise workloads, or the aggregation of many data mart workloads, that are constantly active with high-performance demand, you need efficient management of resources to optimize cost. Otherwise the unused resources of multiple clusters defeat the objective of cost efficiency. When you compare the efficiency and elasticity of resource allocation in Vantage with that of cloud native or "built for the cloud" data warehouses, it becomes clear how much more efficient and cost effective Vantage is for enterprise workloads.

Don't Pay For What You Don't Use

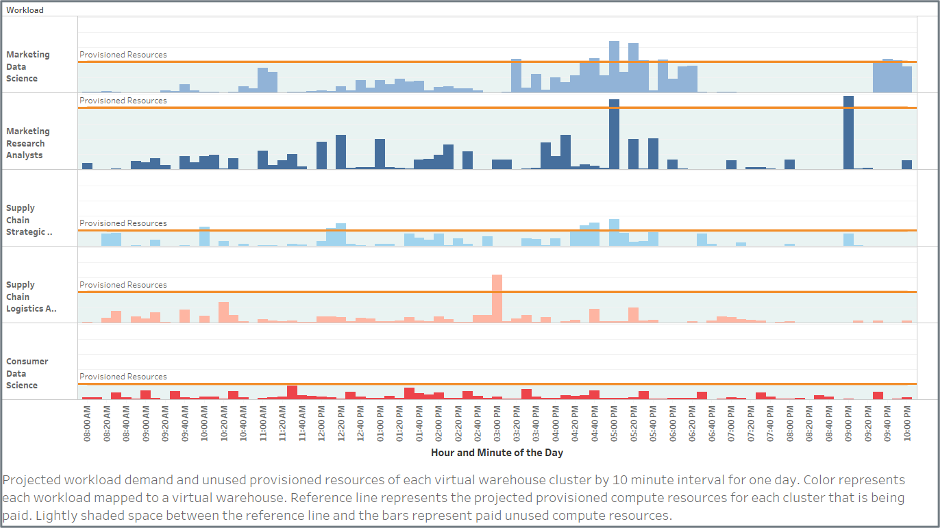

In a deployment of clusters for a cloud native data warehouse, each cluster is a physical container of resources. A query only has access to resources within its own provisioned, active cluster. Each cluster must be sized to accommodate the peak, or most of the peak, demand of its workload. You may also need to make a trade-off when sizing clusters: infrequent high-demand periods that rise above the provisioned resources will queue up and wait.

While clusters may be suspended or turned off when not needed, there is not much opportunity to save costs when workload demand runs 23 to 24 hours per day.

Workload demand will rise and fall during every minute of the day within the projected size of the cluster, leaving unused resources. Those unused resources within each cluster are being paid for, and they cannot be distributed to another cluster that needs more resources. Instead, you must pay additional costs to increase the resources in that other cluster, multiplied by the number of other warehouse clusters needing more resources and by the number of auto-scaled clusters. This adds up very quickly, compounding the cost of paying for what you don’t use.

Fortunately, you can change the size of the cluster to accommodate an increase or decrease in demand, but this is a manual, reactive operation that requires constant monitoring. Changing the cluster size also does not affect a currently running query. When a workload's demand exceeds the available resources in the cluster, the query or queries must be stopped and re-executed to use the additional resources of the larger cluster, which adversely affects the user experience.

Alternatively, you can schedule size changes for specific shifts during the day when demand is predictable. This is counterproductive for workload consumption that varies unpredictably from day to day, and it would need to be repeated for all clusters.

When workload demand decreases, you are back to paying for provisioned resources that go unused. Additionally, if cluster sizes increase exponentially, meaning each step doubles the previous size, you also risk provisioning far more than is needed.
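A small worked example shows how doubling can overshoot. The sketch below assumes, as the paragraph above does hypothetically, that each size step doubles the previous one; the unit counts and the `base_units` parameter are made up for illustration and do not describe any particular product's sizing.

```python
def smallest_doubling_size(demand_units, base_units=1):
    """Smallest size in the series base, 2*base, 4*base, ... that covers demand."""
    size = base_units
    while size < demand_units:
        size *= 2
    return size

demand = 9  # hypothetical peak demand, in resource units
size = smallest_doubling_size(demand)
print(size, size - demand)  # provisioned units, unused units
```

With a peak demand of 9 units, the next available size is 16, so 7 of the 16 provisioned units sit idle even at peak.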

If we stack the unused resources (lightly shaded) of each clustered workload on top of the used resources (darkly shaded) for every hour, you can see that you may be paying mostly for unused resources.


In Vantage, the allocation of resources for each workload operates like a virtual container: it has no physical boundary that keeps a workload from using available resources that are not in use elsewhere, yet it is flexible enough to apply limits if desired.

Vantage scales linearly, so scaling up or out increases performance, concurrency, workload throughput, and so on. Since TWM allocates a proportion of resources, the resource allocations also increase proportionally whenever Vantage is scaled up or out.

Each workload is assigned a proportional allocation from the total available resources in a provisioned warehouse. If a workload's demand does not need all of its allocation, the unclaimed resources may be borrowed by another workload whose demand is exceeding its allocation. This allows for the allocation of resources for each workload to expand and contract with demand without the need to provision more resources.
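The borrowing behavior can be sketched as a generic weighted fair-share calculation. This is an illustration only, not TWM's actual algorithm: the function name `allocate`, the share weights, and the demand figures are all assumptions. Each workload is offered resources in proportion to its share, and whatever a workload does not need is re-offered to the workloads that still want more.

```python
def allocate(total, shares, demand):
    """Grant resources by share weight, lending unclaimed units to busier workloads."""
    granted = {w: 0.0 for w in shares}
    active = set(shares)      # workloads whose demand is not yet met
    remaining = float(total)  # units not yet handed out
    while active and remaining > 1e-9:
        weight = sum(shares[w] for w in active)
        satisfied, spent = set(), 0.0
        for w in active:
            offer = remaining * shares[w] / weight    # proportional offer
            take = min(offer, demand[w] - granted[w])  # never exceed demand
            granted[w] += take
            spent += take
            if demand[w] - granted[w] <= 1e-9:
                satisfied.add(w)
        remaining -= spent
        if not satisfied:
            break  # every active workload took its full offer; nothing left to lend
        active -= satisfied
    return granted

shares = {"etl": 50, "reporting": 30, "ad_hoc": 20}  # hypothetical weights
demand = {"etl": 20, "reporting": 60, "ad_hoc": 40}  # hypothetical demand
print(allocate(100, shares, demand))
```

In this example the `etl` workload needs only 20 of its 50-unit allocation, so the 30 unclaimed units are split between `reporting` and `ad_hoc` in proportion to their shares, giving them 48 and 32 units without any additional resources being provisioned.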

TWM operates within a single scalable warehouse, so the resources are essentially stacked until all the workloads have the resources they need.

While there will be unused resources, what’s left over is a fraction of the compounded waste in multiple provisioned clusters.
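The difference can be illustrated with some simple arithmetic. The demand figures below are invented for the example, and sizing every cluster and the shared pool exactly to peak demand is a simplifying assumption; real sizing involves more factors.

```python
# Hypothetical demand per workload (resource units) for four sample hours.
demand = {
    "etl":       [8, 2, 1, 1],
    "reporting": [1, 6, 2, 1],
    "ad_hoc":    [1, 1, 5, 2],
}

def unused_isolated(demand):
    """Unused unit-hours when each workload has its own cluster sized to its own peak."""
    return sum(max(s) * len(s) - sum(s) for s in demand.values())

def unused_pooled(demand):
    """Unused unit-hours when one shared pool is sized to the peak of combined demand."""
    combined = [sum(hour) for hour in zip(*demand.values())]
    return max(combined) * len(combined) - sum(combined)

print(unused_isolated(demand), unused_pooled(demand))
```

Because the three workloads peak at different hours, the isolated clusters leave 45 unit-hours idle while the shared pool leaves only 9, for the same 31 unit-hours of actual work.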


Teradata’s Consumption Pricing Model is structured so that a customer pays only for what is used, and only for successful queries, not aborted or system-related queries, so that leftover fraction is not even paid for by the customer. Why pay for what you don’t use?
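As a rough sketch of that billing rule, the filter below counts only successful, non-system queries. The `QueryRecord` fields and the unit values are hypothetical; they are not the actual metering schema or rates of the pricing model.

```python
from dataclasses import dataclass

@dataclass
class QueryRecord:
    units: float     # hypothetical consumption units used by the query
    succeeded: bool  # False for aborted queries
    system: bool     # True for system-related queries

def billable_units(records):
    """Sum consumption for successful user queries only."""
    return sum(r.units for r in records if r.succeeded and not r.system)

records = [
    QueryRecord(5.0, succeeded=True, system=False),   # billed
    QueryRecord(4.0, succeeded=False, system=False),  # aborted, not billed
    QueryRecord(2.0, succeeded=True, system=True),    # system query, not billed
    QueryRecord(3.0, succeeded=True, system=False),   # billed
]
print(billable_units(records))
```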

Conclusion

As we have discovered, the inefficiencies of clustered instances for enterprise workloads can compound costs and overrun budgets. The dynamic allocation of resources, combined with a consumption pricing model and advanced analytic features, makes Vantage a clear choice for cost efficiency of enterprise data warehouse workloads and for delivering exceptional performance and capability to the business.

Teradata Workload Management may be considered "legacy"; however, this capability, which has matured over the past few decades, enables Vantage to be fully optimized for cloud and hybrid deployments and to efficiently deliver the lowest cost for enterprise analytics. So it seems that Vantage was born for the cloud before the cloud was born.

Pat Alvarado is a Sr. Solution Architect with Teradata and a senior member of the Institute of Electrical and Electronics Engineers (IEEE). Pat’s background began in hardware and software engineering, applying open source software for distributed UNIX servers and diskless workstations. Pat joined Teradata in 1989, providing technical education to hardware and software engineers and building out the new software engineering environment for the migration of Teradata Database development from a proprietary operating system to UNIX and Linux in a massively parallel processing (MPP) architecture.

Pat presently provides thought leadership in Teradata and open source big data technologies in multiple deployment architectures such as public cloud, private cloud, on-premises, and hybrid.

Pat is also a member of the UCLA Extension Data Science Advisory Board and teaches online UCLA Extension courses on big data analytics and information management.
