Have you ever thought about what can be done in a millisecond (ms)? A housefly can flap its wings in 3 ms, while a honeybee takes 5 ms. A human, however, takes 300 ms to blink. Milliseconds are only 1/1000 of a second, so they seem insignificant. But it doesn’t take many of them to wreak havoc on cloud communication performance.

In an earlier blog post, I talked about the need to consider geography when developing your cloud architecture, primarily because of network latency and its negative effect on performance across the cloud Wide Area Network (WAN). In that article I jumped to the solution for the latency issues without explaining why it works. I think the why is worth knowing, because once you master it you’ll be well on your way to becoming a WAN performance expert.

I’ve been helping Teradata customers migrate their Vantage systems to the cloud for over three years, and WAN performance is always a major concern. I have an electrical engineering degree and designed WAN network gear in the 1980s, but I always felt uncomfortable trying to diagnose cloud WAN performance issues. My standard response was to run to our resident network guru for help. Admittedly my network skills were rusty, but no amount of Internet research gave me the whole picture I needed. It was only after a year of conversations with our network guru that I finally grasped the simplicity of an issue that had seemed so elusive. My goal here is to take that insight and present a simple explanation that will make you a master of cloud WAN communications.

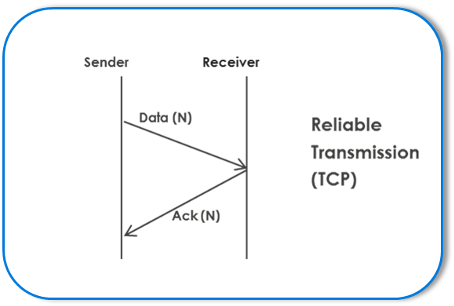

The explanation starts with an application’s need for reliable data transmission. Reliable transmission is achieved with network protocols that use data packet acknowledgements (Acks), as shown in Figure 1. The sender sends a data packet and then waits for the receiver’s Ack indicating that the transmission succeeded. The sender is idle for the entire round-trip time while it waits for that Ack. It is this idle time that kills performance for single data streams when network latency is large, as it is on most WANs.

Figure 1: Reliable Data Transmission via Data Packet Acknowledgements
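To put rough numbers on that idle time, here is a minimal Python sketch of a single stream that sends one block of data and then waits a full round trip for its Ack before sending the next. The 1 Gbps link, the 1 MB block size, and the function name `stop_and_wait_throughput` are assumptions for illustration, and the quoted latency is treated as the round-trip time; real protocols add overheads this simple model ignores.

```python
def stop_and_wait_throughput(block_bytes: int, bandwidth_bps: float, rtt_s: float) -> float:
    """Effective throughput (bits/s) of one stream that sends a block,
    then sits idle for a full round trip waiting for the receiver's Ack.
    Hypothetical helper for illustration; ignores Ack size and protocol overhead."""
    block_bits = block_bytes * 8
    send_s = block_bits / bandwidth_bps  # time the link is actually busy sending
    cycle_s = send_s + rtt_s             # busy time plus idle wait for the Ack
    return block_bits / cycle_s


if __name__ == "__main__":
    link_bps = 1e9        # assumed 1 Gbps link
    block = 1_000_000     # assumed 1 MB sent per Ack
    for rtt_ms in (1, 35):
        tput = stop_and_wait_throughput(block, link_bps, rtt_ms / 1000)
        print(f"RTT {rtt_ms:>2} ms: {tput / 1e6:6.1f} Mbps ({tput / link_bps:.0%} of the link)")
```

With these assumed numbers, the 1 ms case keeps the link about 89% busy, while at 35 ms the same stream uses only about 19% of it.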

The key to understanding WAN performance is knowing that application data transfers involve two sets of Acks: one at the network layer and one at the application layer. The network layer is mostly TCP/IP, the protocol suite that runs the Internet. Internet research on WAN optimization almost exclusively discusses the network layer and TCP/IP. These sources are useful because they explain how TCP/IP uses windowing techniques that, though imperfect, largely neutralize WAN latency: the sender can have many packets in flight before it must stop and wait for an Ack. Because of this windowing, we can assume the network layer is optimized and ignore its Acks for this discussion.
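The benefit of windowing can be sketched the same way. A windowed sender may have up to one window of unacknowledged data in flight, so its throughput is bounded by the window size divided by the round-trip time. The sketch below, assuming a 1 Gbps link and a 35 ms round trip, computes the bandwidth-delay product (the amount of data that must be in flight to keep the link busy) and compares a 65,535-byte window, the largest the original TCP header allows without the window scale option, with a window of that full size. The function names are hypothetical.

```python
def bandwidth_delay_product_bytes(bandwidth_bps: float, rtt_s: float) -> float:
    """Bytes that must be in flight (sent but not yet acknowledged)
    to keep the link busy for a whole round trip."""
    return bandwidth_bps * rtt_s / 8


def windowed_throughput(window_bytes: int, bandwidth_bps: float, rtt_s: float) -> float:
    """Upper bound (bits/s) for a sliding-window sender: at most one window
    of unacknowledged data per round trip, and never more than the link rate."""
    return min(bandwidth_bps, window_bytes * 8 / rtt_s)


if __name__ == "__main__":
    link_bps, rtt_s = 1e9, 0.035   # assumed 1 Gbps link, 35 ms round trip
    bdp = bandwidth_delay_product_bytes(link_bps, rtt_s)
    print(f"Window needed to fill the link: {bdp / 1e6:.1f} MB")
    for window in (65_535, round(bdp)):
        tput = windowed_throughput(window, link_bps, rtt_s)
        print(f"{window:>9,} byte window: {tput / 1e6:6.1f} Mbps")
```

The small window tops out around 15 Mbps, while a window equal to the bandwidth-delay product (about 4.4 MB here) can keep the whole link busy, which is why window scaling matters on high-latency paths.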

The second set of Acks occurs at the application layer. Here we’re talking about protocols like ODBC, JDBC, or native database protocols that run over TCP/IP. My Internet research turned up little information on these Acks or their effect on WAN communications. As it turns out, these protocols do not use windowing techniques and are therefore very susceptible to WAN latency.
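Because the application waits for a reply after each message, those round trips add up no matter how well TCP performs underneath. This minimal sketch assumes the TCP layer is fully optimized and that the application exchanges fixed-size messages, each followed by one idle round trip; the function name `app_transfer_seconds`, the 1 GB transfer, the 1 MB message size, and the 1 Gbps link are all hypothetical.

```python
def app_transfer_seconds(total_bytes: int, message_bytes: int,
                         bandwidth_bps: float, rtt_s: float) -> tuple[float, float, float]:
    """Estimate the time to move total_bytes when the application waits for a
    reply after every message (no application-level windowing).

    Returns (total, sending, waiting) in seconds. TCP underneath is assumed
    to be fully optimized, so sending time depends only on bandwidth."""
    messages = -(-total_bytes // message_bytes)   # ceiling division
    sending_s = total_bytes * 8 / bandwidth_bps    # link busy moving data
    waiting_s = messages * rtt_s                   # one idle round trip per message
    return sending_s + waiting_s, sending_s, waiting_s


if __name__ == "__main__":
    for rtt_ms in (1, 35):
        total, sending, waiting = app_transfer_seconds(
            1_000_000_000, 1_000_000, 1e9, rtt_ms / 1000)  # 1 GB in 1 MB messages, 1 Gbps
        print(f"RTT {rtt_ms:>2} ms: {total:5.1f} s total "
              f"({sending:.1f} s sending, {waiting:.1f} s waiting)")
```

Under these assumptions the actual sending takes about 8 seconds in both cases, but the idle waiting grows from about 1 second at a 1 ms round trip to about 35 seconds at 35 ms.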

Let’s look at some examples with diagrams that finally brought the WAN performance picture into focus for me. On Local Area Networks (LANs), latency is usually less than 1 ms. The wait for data Acks is therefore minor compared to the time it takes to send the data, so there is little idle time on the network (Figure 2). Thus, for single data streams, LAN throughput is close to the network bandwidth.

Figure 2: Large File Transfer with 1ms Latency

Figure 3: Large File Transfer with 35ms Latency

But what happens when latency increases to 35 ms, a modest WAN latency? Figure 3 shows that idle time has increased significantly, so network throughput for this single data stream has plummeted. Many people think that increasing bandwidth can counteract this issue, but it cannot: more bandwidth shortens the time spent sending each block, but it does nothing to shorten the round trip spent waiting for the Ack. The main way to fill idle time on the WAN is to run additional parallel data streams. It is easy to see that several streams like the one in Figure 3, interleaved, would fill the idle time.
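The same simple model shows why adding bandwidth disappoints while adding streams does not. The sketch below assumes 1 MB blocks, a 35 ms round trip, and idealized streams that fill each other’s idle time perfectly up to the link capacity; real streams contend with each other, so treat the results as an upper bound. The function names are hypothetical.

```python
import math


def stream_throughput(block_bytes: int, bandwidth_bps: float, rtt_s: float) -> float:
    """Throughput (bits/s) of one stream that waits a round trip after each block."""
    block_bits = block_bytes * 8
    return block_bits / (block_bits / bandwidth_bps + rtt_s)


def aggregate_throughput(streams: int, block_bytes: int,
                         bandwidth_bps: float, rtt_s: float) -> float:
    """Idealized total for parallel streams: each fills others' idle time,
    but together they can never exceed the link bandwidth."""
    return min(bandwidth_bps, streams * stream_throughput(block_bytes, bandwidth_bps, rtt_s))


def streams_to_fill_link(block_bytes: int, bandwidth_bps: float, rtt_s: float) -> int:
    """Smallest stream count whose combined send time covers one full cycle."""
    send_s = block_bytes * 8 / bandwidth_bps
    return math.ceil((send_s + rtt_s) / send_s)


if __name__ == "__main__":
    block, rtt = 1_000_000, 0.035   # assumed 1 MB blocks, 35 ms round trip
    for bw in (1e9, 2e9):           # doubling the bandwidth...
        print(f"1 stream at {bw / 1e9:.0f} Gbps: {stream_throughput(block, bw, rtt) / 1e6:.0f} Mbps")
    n = streams_to_fill_link(block, 1e9, rtt)  # ...versus adding streams
    print(f"{n} streams at 1 Gbps: {aggregate_throughput(n, block, 1e9, rtt) / 1e6:.0f} Mbps")
```

Under these assumptions, doubling the link from 1 Gbps to 2 Gbps raises a single stream from about 186 Mbps to only about 205 Mbps, because the 35 ms wait dominates each cycle. Six parallel streams, by contrast, are enough to saturate the 1 Gbps link.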

So even if your cloud WAN latency is shorter than the blink of an eye, you have the potential for performance issues. It is therefore critical, when moving large amounts of data over the WAN, to use applications that support parallel data streams. Vantage supports several methods of moving data using parallel data streams, which makes Vantage ideal for cloud computing.

Scott has 30+ years of experience in the information technology field, with 25+ years at Teradata. Scott has held many positions at Teradata, including Professional Services Partner, Architectural Consultant, Data Warehouse Consultant, Solution Architect (supporting Teradata clients), and is currently an Ecosystem Architect. Scott recently ran a Cloud Architecture Practice that helped customers migrate their Teradata solutions to the cloud.

View all posts by W. Scott Wearn